Apulchra genome assembly

Apulchra genome assembly

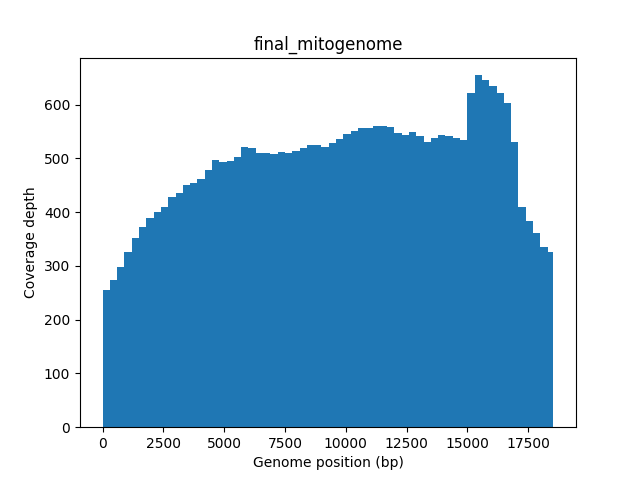

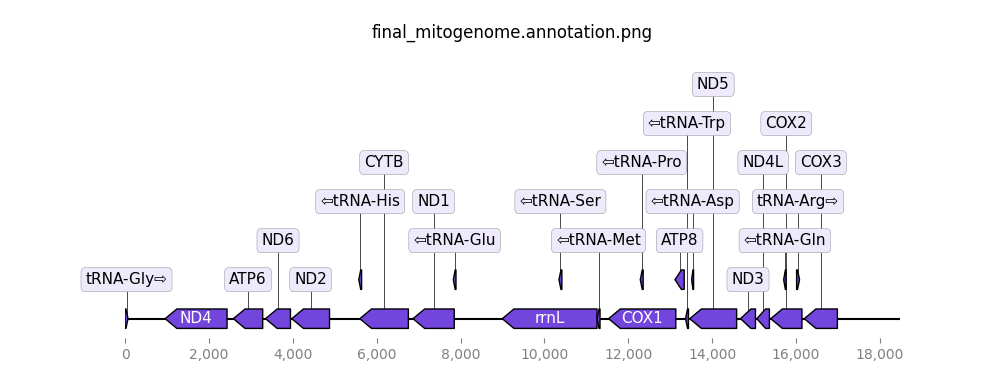

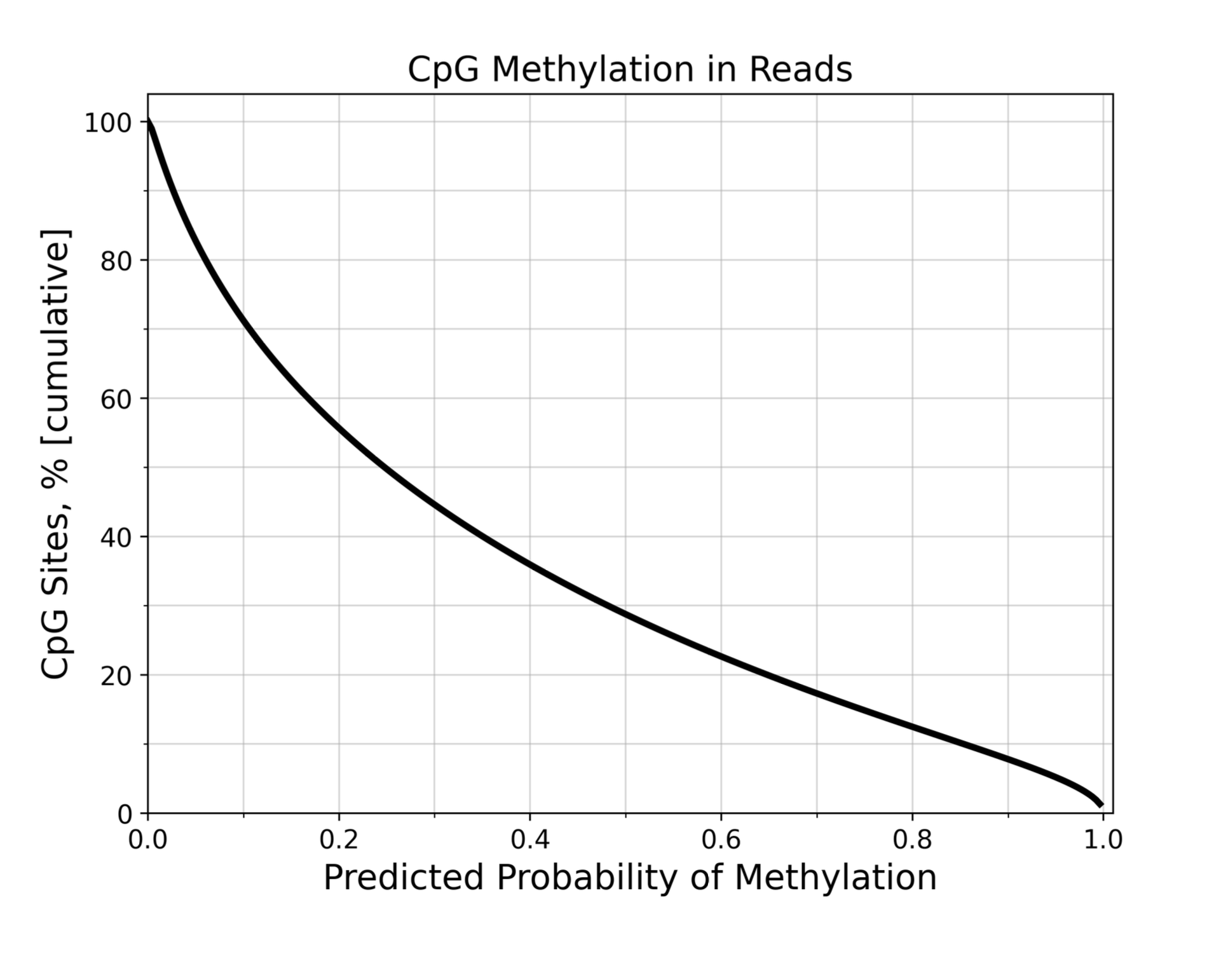

Sperm and tissue from adult Acropora pulchra colonies were collected from Moorea, French Polynesia and sequencing with PacBio (long reads) and Illumina (short reads). This post will detail the genome assembly notes. The github for this project is here.

I’m going to write notes and code chronologically so that I can keep track of what I’m doing each day. When assembly is complete, I will compile the workflow in a separate post.

20240206

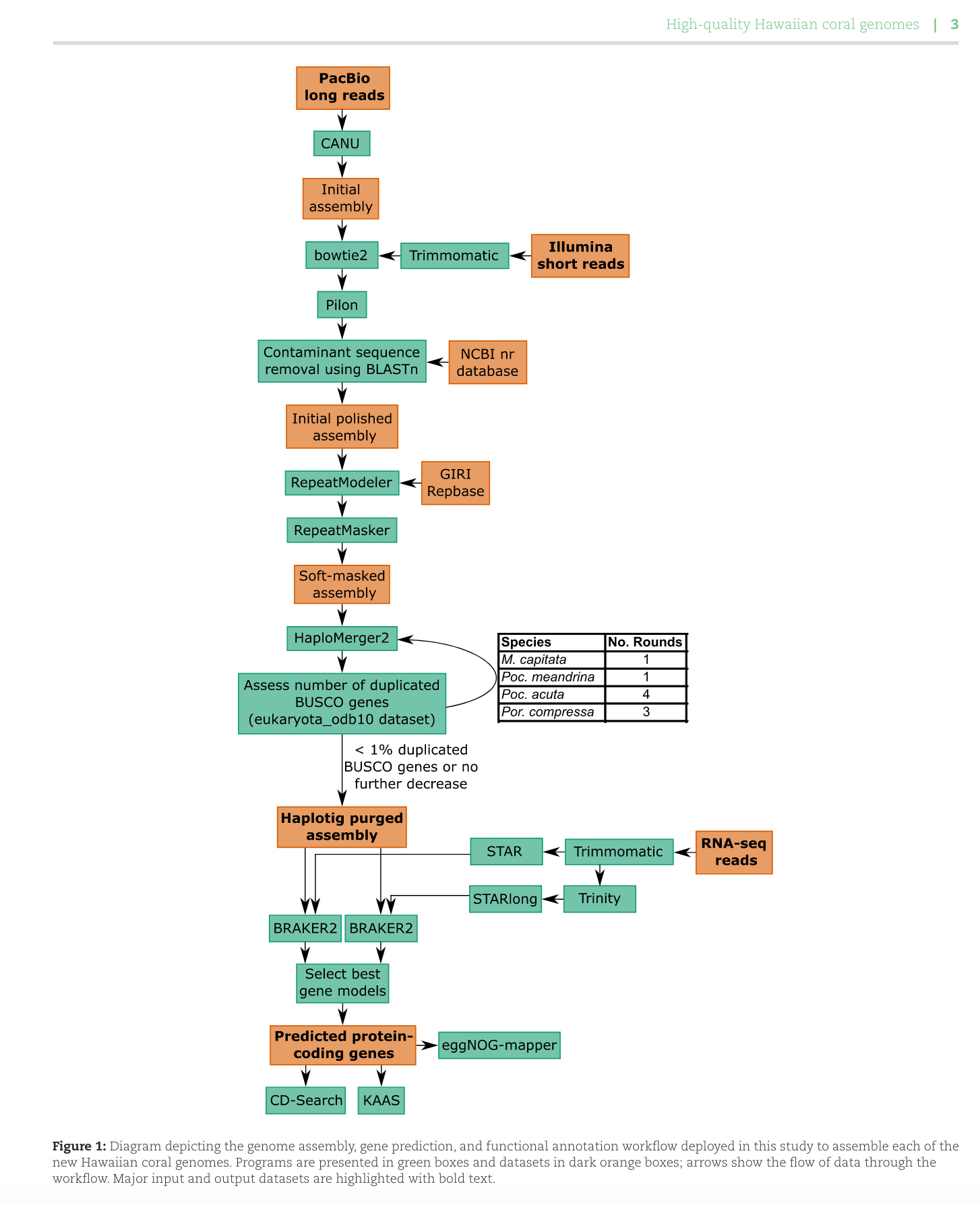

Met w/ Ross and Hollie today re Apulchra genome assembly. We decided to move forward with the workflow from Stephens et al. 2022 which assembled genomes for 4 Hawaiian corals.

PacBio long reads were received in late Jan/early Feb 2024. According to reps from Genohub, the PacBio raw output looks good.

We decided to move forward with Canu to assembly the genome. Canu is specialized to assemble PacBio sequences, operating in three phases: correction, trimming and assembly. According to the Canu website, “The correction phase will improve the accuracy of bases in reads. The trimming phase will trim reads to the portion that appears to be high-quality sequence, removing suspicious regions such as remaining SMRTbell adapter. The assembly phase will order the reads into contigs, generate consensus sequences and create graphs of alternate paths.”

The PacBio files that will be used for assembly are located here on Andromeda: /data/putnamlab/KITT/hputnam/20240129_Apulchra_Genome_LongRead. The files in the folder that we will use are:

m84100_240128_024355_s2.hifi_reads.bc1029.bam

m84100_240128_024355_s2.hifi_reads.bc1029.bam.pbi

The bam file contains all of the read information in computer language and the pbi file is an index file of the bam. Both are needed in the same folder to run Canu.

For Canu, input files must be fasta or fastq format. I’m going to use bam2fastqfrom the PacBio github. This module is not on Andromeda so I will need to install it via conda.

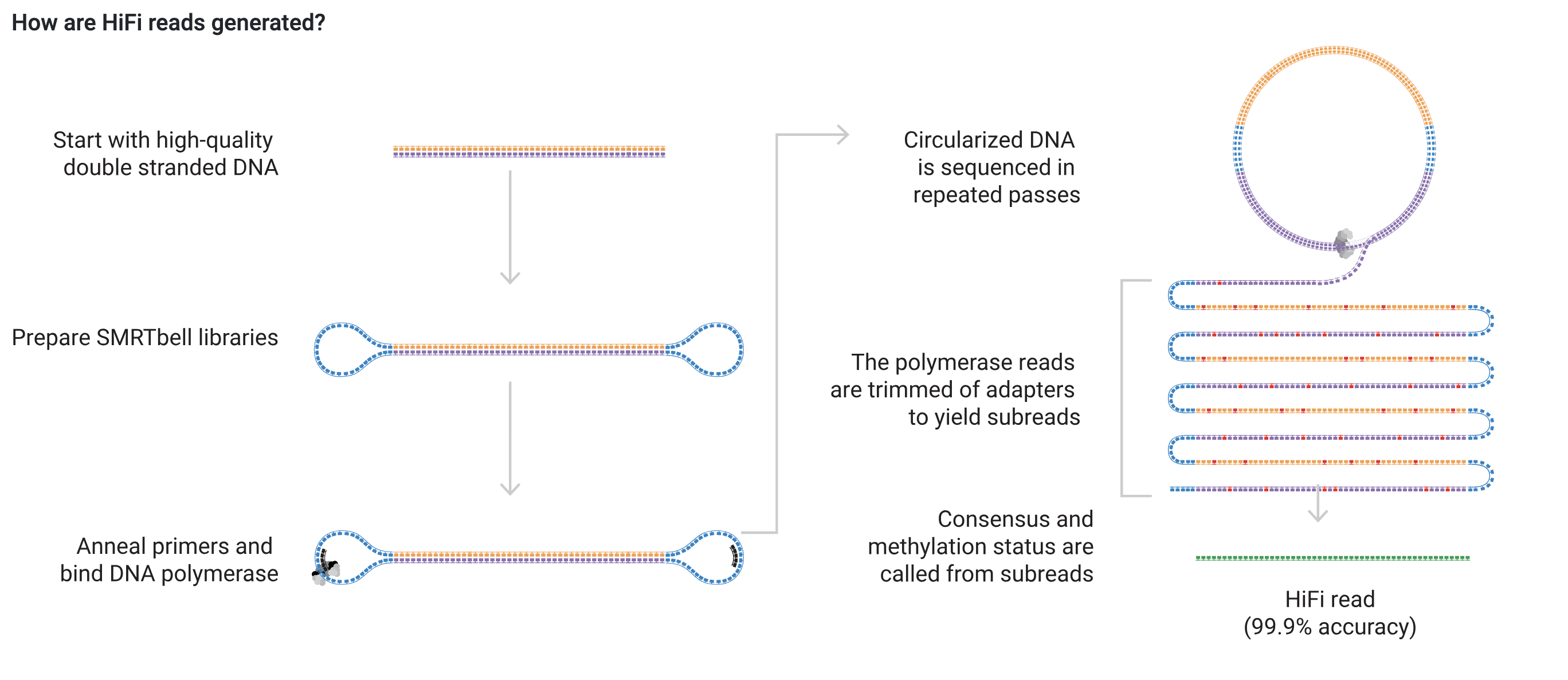

The PacBio sequencing for the Apul genome were done with HiFi sequencing that are produced with circular consensus sequencing on PacoBio long read systems. Here’s how HiFi reads are generated from the PacBio website:

Since Hifi sequencing was used, a specific HiCanu flag (-pacbio-hifi) must be used. Additionally, in the Canu tutorial, it says that if this flag is used, the program is assuming that the reads are trimmed and corrected already. However, our reads are not. I’m going to try to run the first pass at Canu with the -raw flag.

Before Canu, I will run bam2fastq. This is a part of the PacBio BAM toolkit package pbtk. I need to create the conda environment and install the package. Load miniconda module: module load Miniconda3/4.9.2. Create a conda environment.

conda create --prefix /data/putnamlab/conda/pbtk

Install the package. Once the package is installed on the server, anyone in the Putnam lab group can use it.

conda activate /data/putnamlab/conda/pbtk

conda install -c bioconda pbtk

In my own directory, make a new directory for genome assembly

cd /data/putnamlab/jillashey

mkdir Apul_Genome

cd Apul_Genome

mkdir assembly structural functional

cd assembly

mkdir scripts data output

Run code to make the PacBio bam file to a fastq file. In the scripts folder: nano bam2fastq.sh

#!/bin/bash -i

#SBATCH -t 500:00:00

#SBATCH --nodes=1 --ntasks-per-node=1

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

conda activate /data/putnamlab/conda/pbtk

echo "Convert PacBio bam file to fastq file" $(date)

bam2fastq -o /data/putnamlab/jillashey/Apul_Genome/assembly/data/m84100_240128_024355_s2.hifi_reads.bc1029.fastq /data/putnamlab/KITT/hputnam/20240129_Apulchra_Genome_LongRead/m84100_240128_024355_s2.hifi_reads.bc1029.bam

echo "Bam to fastq complete!" $(date)

conda deactivate

Submitted batch job 294235

20240208

Job pended for about a day, then ran in 1.5 hours. I got this error message: bash: cannot set terminal process group (-1): Function not implemented bash: no job control in this shell, but not sure why. A fastq.gz file was produced! The file is pretty large (35G).

less m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq.gz

@m84100_240128_024355_s2/261887593/ccs

TATAAGTTTTACAGCTGCCTTTTGCTCAGCAAAGAAAGCAGCATTGTTATTGAACAGAAAAAGCCTTTTGGTGATATAAAGGTTTCTAAGGGACCAAAGTTTGATCTAGTATGCTAAGTGTGGTGGGTTAAAACTTTGTTTCACCTTTTTCCCGTGATGTTACAAAATTGGTGCAAATATTCATGACGTCGTACTCAAGTCTGACACTAAGAGCATGCAATTACTTAAACAAACAAGCCATACACCAATAAACTGAAGCTCTGTCAACTAGAAAACCTTTGAGTATTTTCATTGTAAAGTACAAGTGGTATATTGTCACTTGCTTTTACAACTTGAAGAACTCACAGTTAAGTTACTAGATTCACCATAGTGCTTGGCAATGAAGAAGCCAAATCACATAAAGTCGGAGCATGTGGTGTTTAGACCTAATCAAACAAGAACACAATATTTAGTACCTGCATCCTGTCTAAGGAGGAAATTTTAAGCTGCTTTCTTTTAAATTTTTTTTATTAGCATTTCAATGGTTGAGGTCGATTATAGGTGCTAGGCTTTAATTCCGTACTATGAAAGAAGAAAGGTCGTTGTTATTAACCATGTCAAACAGAGAAACACATGGTAAAAAATTGACTTCCTTTTCCTCTCGTTGCCACTTAAGCTTAATGATGGTGTTTGACCTGAAAGATGTTACAATTGTTTTAGATGAAAAGACTGTTCTGCGTAAAACAGTGAAGCCTCCCAACTTATTTTGTTATGTGGATTTTGTTGTCTTGTTAGTAACATGTATTGGACTATCTTTTGTGAGTACATAGCTTTTTTTCCATCAACTGACTATATACGTGGTGTAATTTGAGATCATGCCTCCAAGTGTTAGTCTTTTGTTTGGGGCTAACTCGTAAAAGACAAAGGGAGGGGGGTTGTCTAATTCCTAAGCAAAGCATTAAGTTTAACACAGGAAATTGTTTGCGTTGATATTGCTATCCTTTCAGCCCCAAACAAAAAATTTAATGGTTATTTTATTTTACATCTATTGTAAATATATTTTAACATTAATTTTTATTATTGCACTGTAAATACTTGTACTAATGTTCTGTTTGAATTAATTTTGATTCATTCCTTGTGCTTACAACAACAGGGATACAAAACCGATATGTATAATAATACTATTAGAGATGCTTATTTGCATTTTTAGCCCATACCATGAGTTTTAATAACGCCAGGCCATTGGAGATTTTATGGAGTGAGGATTCATTGTACAAACATGGTTGATTTAATATTAAAGTTGTATCCAAATAATTAATATCTGCTGTGATCAGTGAAAGATTGACCTTTCAGTTGTTTGGTTGCACCTTCATCTTATTGGAAACAACTGAATGGAGCATCTTTCCAGTTTAAAAATGTACCACTGCCCACTTTCATGAAGTTATGCCACATATTAATAATGACTATTAATTGTTGAAAACCCTTCTTCCAAAATGTTTCCATTTATTTGTAATAGCATATGTGGTCCATCAGAACAATAATTTAAATCATTACTATTAATAATTTTCCAATAACTGACTTTCAAACCTAGCCAACAAGCATAAGTCAGTAAGCCACAGAGTCCAGAGATACACTTACACTTTACTTTTCACTTCTGAAACATTTTATAATCTCAGTATGAGCATAGAACTTTTCAGTTGGGCAGCATGGAATAGAACCTTTGGACCCCTCTGTGAATATCAAAAATAGGCAACCACTTCCAGCATACACTCTAGCCTCCTTCATAAAGCAAGCCTTAGTGTTTTAGCTTCTACTAGTTAGATTCATTTTAAAAGAAGTTCAGTATACTTAATCTTATAGAAGCTGATTGTGATATAATTGCATAGGTGGATCTCAGAAAAGTGAGATGTAGCTGTCAAATTAAAAGAAGTCCTTTCCAAGCGTAGCTTCTGATAAACAATGCATTTTAGTTAACATTGGATTATGGTTTCAAAGGACTTGTAAAGCTAAATTCAAGTTTTTATGACAACTTGAAAGCCTTTGCCACAGTCTCCGCTGATTTAAGACTTCCATCAAAGTTAGAGTGGTGTGAATGCATCTCCACATGCAATTAATAAAGGTGAGGCAGACAACACAAAACACCCTGGTGCACCATCAACTCCACGGATCACTTGACTGTAACGCCATCTTATACAGCGACTGCCAATTGGAACTGGAAGATCAGGAATGATCTCTTTCACATGGGAAATGAGCATGGTCTTGATAATGCTTTCATCAGTATCCCAATGTTGAAGACAGAAGGACTTTGTTGAATGTACTAAAAGAGAGGGACCAACATCCACTGGATCTGGAACAGAATGCCAAAGCATATGCAAAATGTTATCATGTATAATAAAGAAAAAACCAAACTCATGAAGTTCAACGGTCACAGGCTTTGGTAAAAGACTGTACATGGTAAAGTACTCAGGGTGAAAGTTTGTATCCAAAAAACGAAAAGCTAACCTGTACCACATCTCTTCCGAGAGTCCACTGACACAAATGCAATATTAGGATTGCCAGTCGCATATTTACACGTCCATGGCACATTGATAACTGCTTCATGATCAAAAAAGTATCCTACTGCAAACCGTGATGAATATTCCACATTTTGTAAGGATTTGATTTCATTTTGTAAGAATGCTTGAATTGAACCTTGTAGCTGAAGAAGTTGTGGTACTGGAATAGTTACTATGACTGA

See how many @m84100 are in the file. I’m not sure what these stand for, maybe contigs?

zgrep -c "@m84100" m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq.gz

5898386

More than 5 million, so many not contigs? I guess it represents the number of HiFi reads generated. Now time to run Canu! Canu is already installed on the server, which is nice.

In the scripts folder: nano canu.sh

#!/bin/bash

#SBATCH -t 500:00:00

#SBATCH --nodes=1 --ntasks-per-node=1

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load canu/2.2-GCCcore-11.2.0

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

echo "Unzip paco-bio fastq file" $(date)

gunzip m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq.gz

echo "Unzip complete, starting assembly" $(date)

canu -p apul -d /data/putnamlab/jillashey/Apul_Genome/assembly/data genomeSize=475m -raw -pacbio-hifi m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq

echo "Canu assembly complete" $(date)

I’m not sure if the raw and -pacbio-hifi will be compatible, as the Canu tutorial says that the -pacbio-hifi assumes that the input is trimmed and corrected (still not sure what this means). Submitted batch job 294325

20240212

The canu script appears to have ran. The script canu.sh itself ran in ~40 mins, but it spawned 10000s of other jobs on the server (for parallel processing, I’m guessing). Since I didn’t start those jobs, I didn’t get emails when they finished, so I just had to check the server every few hours. It took about a day for the rest of the jobs to finish. First, I’m looking at the slurm-294325.error output file. There’s a lot in this file, but I will break it down.

It first provides details on the slurm support and associated memory, as well as the number of threads that each portion of canu will need to run.

-- Slurm support detected. Resources available:

-- 25 hosts with 36 cores and 124 GB memory.

-- 3 hosts with 24 cores and 124 GB memory.

-- 1 host with 36 cores and 123 GB memory.

-- 1 host with 48 cores and 753 GB memory.

-- 8 hosts with 36 cores and 61 GB memory.

-- 1 host with 48 cores and 250 GB memory.

-- 2 hosts with 36 cores and 250 GB memory.

-- 2 hosts with 48 cores and 375 GB memory.

-- 4 hosts with 36 cores and 502 GB memory.

--

-- (tag)Threads

-- (tag)Memory |

-- (tag) | | algorithm

-- ------- ---------- -------- -----------------------------

-- Grid: meryl 12.000 GB 6 CPUs (k-mer counting)

-- Grid: hap 12.000 GB 12 CPUs (read-to-haplotype assignment)

-- Grid: cormhap 13.000 GB 12 CPUs (overlap detection with mhap)

-- Grid: obtovl 8.000 GB 6 CPUs (overlap detection)

-- Grid: utgovl 8.000 GB 6 CPUs (overlap detection)

-- Grid: cor -.--- GB 4 CPUs (read correction)

-- Grid: ovb 4.000 GB 1 CPU (overlap store bucketizer)

-- Grid: ovs 16.000 GB 1 CPU (overlap store sorting)

-- Grid: red 10.000 GB 6 CPUs (read error detection)

-- Grid: oea 8.000 GB 1 CPU (overlap error adjustment)

-- Grid: bat 64.000 GB 8 CPUs (contig construction with bogart)

-- Grid: cns -.--- GB 8 CPUs (consensus)

--

-- Found trimmed raw PacBio HiFi reads in the input files.

The file also says that it skipped the correction and trimming steps. This indicates that adding the -raw flag didn’t work.

-- Stages to run:

-- assemble HiFi reads.

--

--

-- Correction skipped; not enabled.

--

-- Trimming skipped; not enabled.

Two histograms are then presented. The first is a histogram of correct reads:

-- In sequence store './apul.seqStore':

-- Found 5897694 reads.

-- Found 79182880880 bases (166.7 times coverage).

-- Histogram of corrected reads:

--

-- G=79182880880 sum of || length num

-- NG length index lengths || range seqs

-- ----- ------------ --------- ------------ || ------------------- -------

-- 00010 26133 264284 7918288640 || 1150-2287 8297|--

-- 00020 22541 592551 15836596549 || 2288-3425 76768|-------------

-- 00030 20184 964605 23754880672 || 3426-4563 316378|---------------------------------------------------

-- 00040 18285 1377072 31673163019 || 4564-5701 397324|---------------------------------------------------------------

-- 00050 16551 1832151 39591448183 || 5702-6839 374507|------------------------------------------------------------

-- 00060 14786 2337786 47509730799 || 6840-7977 351843|--------------------------------------------------------

-- 00070 12854 2910882 55428020435 || 7978-9115 339864|------------------------------------------------------

-- 00080 10612 3585762 63346313974 || 9116-10253 340204|------------------------------------------------------

-- 00090 7724 4449769 71264594524 || 10254-11391 341160|-------------------------------------------------------

-- 00100 1150 5897693 79182880880 || 11392-12529 343170|-------------------------------------------------------

-- 001.000x 5897694 79182880880 || 12530-13667 340043|------------------------------------------------------

-- || 13668-14805 336214|------------------------------------------------------

-- || 14806-15943 328927|-----------------------------------------------------

-- || 15944-17081 316290|---------------------------------------------------

-- || 17082-18219 293393|-----------------------------------------------

-- || 18220-19357 261212|------------------------------------------

-- || 19358-20495 225351|------------------------------------

-- || 20496-21633 188701|------------------------------

-- || 21634-22771 154123|-------------------------

-- || 22772-23909 124842|--------------------

-- || 23910-25047 99659|----------------

-- || 25048-26185 78280|-------------

-- || 26186-27323 61530|----------

-- || 27324-28461 47760|--------

-- || 28462-29599 37668|------

-- || 29600-30737 29339|-----

-- || 30738-31875 22548|----

-- || 31876-33013 17292|---

-- || 33014-34151 13055|---

-- || 34152-35289 9797|--

-- || 35290-36427 7251|--

-- || 36428-37565 5005|-

-- || 37566-38703 3560|-

-- || 38704-39841 2474|-

-- || 39842-40979 1553|-

-- || 40980-42117 1006|-

-- || 42118-43255 604|-

-- || 43256-44393 334|-

-- || 44394-45531 184|-

-- || 45532-46669 102|-

-- || 46670-47807 45|-

-- || 47808-48945 18|-

-- || 48946-50083 11|-

-- || 50084-51221 4|-

-- || 51222-52359 1|-

-- || 52360-53497 2|-

-- || 53498-54635 0|

-- || 54636-55773 0|

-- || 55774-56911 0|

-- || 56912-58049 1|-

There’s also a histogram of corrected-trimmed reads, but it is the exact same as the histogram above. The histogram represents the length ranges for each sequence and the number of sequences that have that length range. For example, if we look at the top row, there are 8297 sequences that range in length from 1150-2287 bp.

It looks like canu did run jobs by itself:

-- For 5897694 reads with 79182880880 bases, limit to 791 batches.

-- Will count kmers using 16 jobs, each using 13 GB and 6 threads.

--

-- Finished stage 'merylConfigure', reset canuIteration.

--

-- Running jobs. First attempt out of 2.

--

-- 'meryl-count.jobSubmit-01.sh' -> job 294326 tasks 1-16.

--

----------------------------------------

-- Starting command on Thu Feb 8 14:32:12 2024 with 8923.722 GB free disk space

cd /glfs/brick01/gv0/putnamlab/jillashey/Apul_Genome/assembly/data

sbatch \

--depend=afterany:294326 \

--cpus-per-task=1 \

--mem-per-cpu=4g \

-D `pwd` \

-J 'canu_apul' \

-o canu-scripts/canu.01.out canu-scripts/canu.01.sh

-- Finished on Thu Feb 8 14:32:13 2024 (one second) with 8923.722 GB free disk space

Let’s look at the output files that canu produced in /data/putnamlab/jillashey/Apul_Genome/assembly/data. I’ll be using the Canu tutorial output information to understand outputs.

-rwxr-xr-x. 1 jillashey 1.1K Feb 8 14:13 apul.seqStore.sh

-rw-r--r--. 1 jillashey 951 Feb 8 14:31 apul.seqStore.err

drwxr-xr-x. 3 jillashey 4.0K Feb 8 14:32 apul.seqStore

drwxr-xr-x. 9 jillashey 4.0K Feb 8 23:57 unitigging

drwxr-xr-x. 2 jillashey 4.0K Feb 9 01:36 canu-scripts

lrwxrwxrwx. 1 jillashey 24 Feb 9 01:36 canu.out -> canu-scripts/canu.09.out

drwxr-xr-x. 2 jillashey 4.0K Feb 9 01:36 canu-logs

-rw-r--r--. 1 jillashey 23K Feb 9 01:47 apul.report

-rw-r--r--. 1 jillashey 7.0M Feb 9 01:51 apul.contigs.layout.tigInfo

-rw-r--r--. 1 jillashey 155M Feb 9 01:51 apul.contigs.layout.readToTig

-rw-r--r--. 1 jillashey 2.8G Feb 9 01:57 apul.unassembled.fasta

-rw-r--r--. 1 jillashey 943M Feb 9 02:01 apul.contigs.fasta

The apul.report file will provide information about the analysis during assembly, including histogram of read lengths, the histogram or k-mers in the raw and corrected reads, the summary of corrected data, summary of overlaps, and the summary of contig lengths. The histogram of read lengths is the same as in the error file above. There is also a histogram (?) of the mer information:

-- 22-mers Fraction

-- Occurrences NumMers Unique Total

-- 1- 1 0 0.0000 0.0000

-- 2- 2 4316301 **** 0.0150 0.0002

-- 3- 4 855268 0.0172 0.0002

-- 5- 7 183637 0.0183 0.0002

-- 8- 11 62578 0.0187 0.0002

-- 12- 16 29616 0.0188 0.0002

-- 17- 22 22278 0.0189 0.0003

-- 23- 29 20222 0.0190 0.0003

-- 30- 37 28236 0.0190 0.0003

-- 38- 46 73803 0.0192 0.0003

-- 47- 56 556391 0.0194 0.0003

-- 57- 67 6166302 ****** 0.0218 0.0010

-- 68- 79 35800144 *************************************** 0.0477 0.0098

-- 80- 92 63190280 ********************************************************************** 0.1835 0.0633

-- 93- 106 26607016 ***************************** 0.3988 0.1607

-- 107- 121 2495822 ** 0.4799 0.2024

-- 122- 137 2376701 ** 0.4871 0.2066

-- 138- 154 16117515 ***************** 0.4965 0.2131

-- 155- 172 47510630 **************************************************** 0.5575 0.2605

-- 173- 191 41907409 ********************************************** 0.7262 0.4060

-- 192- 211 9190117 ********** 0.8650 0.5375

-- 212- 232 1806623 ** 0.8934 0.5669

-- 233- 254 4028020 **** 0.8997 0.5743

-- 255- 277 3875382 **** 0.9140 0.5926

-- 278- 301 1456651 * 0.9271 0.6107

-- 302- 326 1905710 ** 0.9319 0.6181

-- 327- 352 3003944 *** 0.9388 0.6293

-- 353- 379 1723658 * 0.9491 0.6477

-- 380- 407 968475 * 0.9549 0.6587

-- 408- 436 1249856 * 0.9583 0.6657

-- 437- 466 890757 0.9626 0.6752

-- 467- 497 768714 0.9656 0.6824

-- 498- 529 937920 * 0.9683 0.6892

-- 530- 562 618907 0.9716 0.6978

-- 563- 596 582755 0.9737 0.7039

-- 597- 631 535408 0.9757 0.7100

-- 632- 667 441122 0.9775 0.7159

-- 668- 704 459953 0.9791 0.7211

-- 705- 742 367266 0.9807 0.7268

-- 743- 781 354455 0.9819 0.7316

-- 782- 821 304354 0.9832 0.7365

There are 22-mers. A k-mer are substrings of length k contained in a biological sequence. For example, the term k-mer refers to all of a sequence’s subsequences of length k such that the sequence AGAT would have four monomers (A, G, A, and T), three 2-mers (AG, GA, AT), two 3-mers (AGA and GAT) and one 4-mer (AGAT). So if we have 22-mers, we have subsequences of 22 nt? The Canu documentation says that k-mer histograms with more than 1 peak likely indicate a heterozygous genome. I’m not sure if the stars represent peaks or counts but if this is a histogram of k-mer information, it has two peaks, indicating a heterozygous genome.

The Canu documentation states that corrected read reports should be given with information about number of reads, coverage, N50, etc. My log file does not have this information, likely because the trimming and correcting steps were not performed. Instead, I have this information:

-- category reads % read length feature size or coverage analysis

-- ---------------- ------- ------- ---------------------- ------------------------ --------------------

-- middle-missing 4114 0.07 10652.45 +- 6328.73 1185.95 +- 2112.86 (bad trimming)

-- middle-hump 4148 0.07 11485.92 +- 4101.92 4734.88 +- 3702.70 (bad trimming)

-- no-5-prime 8533 0.14 9311.86 +- 5288.80 2087.03 +- 3315.79 (bad trimming)

-- no-3-prime 10888 0.18 7663.24 +- 5159.57 1677.51 +- 3090.22 (bad trimming)

--

-- low-coverage 48831 0.83 6823.01 +- 3725.15 16.35 +- 15.52 (easy to assemble, potential for lower quality consensus)

-- unique 5419159 91.89 9403.44 +- 4761.57 110.87 +- 40.33 (easy to assemble, perfect, yay)

-- repeat-cont 93801 1.59 7730.76 +- 4345.55 1001.60 +- 665.30 (potential for consensus errors, no impact on assembly)

-- repeat-dove 380 0.01 23076.97 +- 3235.06 878.08 +- 510.47 (hard to assemble, likely won't assemble correctly or eve

n at all)

--

-- span-repeat 64724 1.10 11397.59 +- 5058.57 2335.58 +- 2836.91 (read spans a large repeat, usually easy to assemble)

-- uniq-repeat-cont 182925 3.10 9764.80 +- 4218.80 (should be uniquely placed, low potential for consensus e

rrors, no impact on assembly)

-- uniq-repeat-dove 14288 0.24 17978.89 +- 4847.85 (will end contigs, potential to misassemble)

-- uniq-anchor 19659 0.33 11312.36 +- 4510.86 5442.58 +- 3930.94 (repeat read, with unique section, probable bad read)

I’m not sure why I’m getting all of this information or what it means. There is a high % of unique reads in the data which is good. In the file, there is also information about edges (not sure what this means), as well as error rates. May discuss further with Hollie.

The Canu output documentation says that I’m supposed to get a file with corrected and trimmed reads but I don’t have those. I do have apul.unassembled.fasta and apul.contigs.fasta.

head apul.unassembled.fasta

>tig00000838 len=19665 reads=4 class=unassm suggestRepeat=no suggestBubble=no suggestCircular=no trim=0-19665

TAAAAACATTGATTCTTGTTTCAATATGAGACTTGTTTCGGAAGATGTTCGCGCAGGTTACATTTCATAATCCACAAGAAATGCGACATCGCCAACCTTA

CTTTAGTGTTTGCATTTAAGCAAAACAATGATAAAGAAACAAATCTCACATCTCGCAAAAGTATGCATTCTATGAAGAACAATGAAAATTAATGAAAGTG

AATCTTACACCTCCTATTCAAGACGCCGCATTAATTCAACTTGTTGATTTCTCCTAAAACGCTTTCTTTTAGAAGGCTTTCTTAGTCTTTCAATTGTAAA

GAATACATAAAGGACTCATGACCACTTATGTTCTTAAGTGTTACTGCTGCTTTAAAACACGTTACAAACCACATGTGAATATAGTTGCGGCACAGAAGGG

AAAATCGCTGAAATGCTGTCCAAATATACACAATATCATTAAGTAAAGTACGATCGTCCGGGTGAGTGTAGTCCTGAGAAGGACTGTTTGAGATGACATT

GACTGACGTTTCGACAACCTGAGCGGAAGTCATCTTCAGAGTCATCTTCACTTGACTCTGAAGATGACTTCCGCTCAGGTTGTCGAAACGTCAGTCAATG

TCATCTCAAACAGTCCTTCTCAGGACTACACTCACCCGGACGATCGTACTTTACTTAATGATATGACTCCTGGGTTCAAACCATTTACAAATATACACAA

GTTTGAAAGATCATATCGCCTGCCAGTTTTACAACTCGTCTTAGACACAATGGAATACAAAACCCTACCGAACGAATACCTTTGATTGAGATTTATGAAT

GTGAAACAGCGACTTCGAGAGAAAAACGAATTCTTAAAAATGCAGTTCAACTCTATCGTCATTCAACTGAAGCCAGCCGTGGCTCGGCTTCACAAGAAAG

head apul.contigs.fasta

>tig00000004 len=43693 reads=3 class=contig suggestRepeat=no suggestBubble=yes suggestCircular=no trim=0-43693

TACAATTTTAGAACACGGGACCAGCTTAGCATAATAGCTTCACCTTTCGTCTATCTAACTCTAGGAAGTTTTAATTTTTTCAAGTATTATAAAGGGCTCC

GTCGACTGTCAAAGATTTGCCTTTTCAAGCTCCAATGGAAACTGTAAAAGTTGCGTATTTTTACGAGCTTGAAACGCATCTTGGTATGCCCGATATCAAG

TCGAAATTAATATTGAGATAATCCTTTGGCCTTCTTCTATCAACATCTGAAATAAAAATCTGGGCAGTTTGAACGCGCTCTATCAAACATAACAGATTTG

AAGGTAGGTGATAACTTATTTGCATAATCTACGTTAACAAAAAGTCTATTTATAGAATGACTACTCGGCATATTTCTAACAGTGGTACTTCAGATACGTT

TTGATGGACTTATTATTCTGTCGTTTGTATTGTTTTCTTCAATTATTTAGCCTTAATAATTCCAAATAATAAAGAAATAAGGAAAGTCTTTGGTGTAAGT

CACACTCAAAAGGTGAGTTTCAACAGTTACTGAACACCCTTACGTATTAAACAGTCATTTCAATTTCCAGATTCTAACAGAAAATGTCAAATCGTTGTTT

TATAGTAGAAATCCATCTTCAAAAGTTATTCCCCGCTTATGCAGGCTTGATTCTCGCGGCTCTTTCCAGCTCGGTTTACAATATAAGACACCGGTGCAGA

TACCATTGAACTTGTAAACAATGTCACGCAAATTAAACTGTACTTCAATTTGCAAGCCATACAGCTTTAAGTCAGGTCTTTATTGAACTTTCTAAGTCAA

GGTTGGGGAATATAAAGATATTTTATTACCAGTATATTTTCGGTGAAAATTACAACGGATACATGTTATGGGCCTGTTCTTTAAACTCAGTTACATACAT

The unassembled file contains the reads and low-coverage contigs which couldn’t be incorporated into the primary assembly. The contigs file contains the full assembly, including unique, repetitive, and bubble elements. What does this mean? Unsure, but the header line provides metadata on each sequence.

len

Length of the sequence, in bp.

reads

Number of reads used to form the contig.

class

Type of sequence. Unassembled sequences are primarily low-coverage sequences spanned by a single read.

suggestRepeat

If yes, sequence was detected as a repeat based on graph topology or read overlaps to other sequences.

suggestBubble

If yes, sequence is likely a bubble based on potential placement within longer sequences.

suggestCircular

If yes, sequence is likely circular. The fasta line will have a trim=X-Y to indicate the non-redundant coordinates

If we are looking at this header: >tig00000004 len=43693 reads=3 class=contig suggestRepeat=no suggestBubble=yes suggestCircular=no trim=0-43693, the length is the contig is 43693 bp, 3 reads were used to form the contig, class is a contig, no repeats used to form the contig, the sequence is likely a bubble based on placement within longer sequences, the sequence is not circular, and the entire contig is non-redundant. Not sure what bubble means…

Because Canu didn’t trim or correct the Hifi reads, I may need to use a different assembly tool. I’m going to try Hifiasm, which is a fast haplotype-resolved de novo assembler designed for PacBio HiFi reads. According to Hifiasm github, here are some good reasons to use Hifiasm:

-

Hifiasm delivers high-quality telomere-to-telomere assemblies. It tends to generate longer contigs and resolve more segmental duplications than other assemblers.

-

Hifiasm can purge duplications between haplotigs without relying on third-party tools such as purge_dups. Hifiasm does not need polishing tools like pilon or racon, either. This simplifies the assembly pipeline and saves running time.

-

Hifiasm is fast. It can assemble a human genome in half a day and assemble a ~30Gb redwood genome in three days. No genome is too large for hifiasm.

-

Hifiasm is trivial to install and easy to use. It does not required Python, R or C++11 compilers, and can be compiled into a single executable. The default setting works well with a variety of genomes.

If I use this tool, I may not need to pilon to polish the assembly. This tool isn’t on the server, so I’ll need to create a conda environment and install the package.

cd /data/putnamlab/conda

mkdir hifiasm

cd hifiasm

module load Miniconda3/4.9.2

conda create --prefix /data/putnamlab/conda/hifiasm

conda activate /data/putnamlab/conda/hifiasm

conda install -c bioconda hifiasm

Once this package is installed, run code for hifiasm assembly. In the scripts folder: nano hifiasm.sh

#!/bin/bash -i

#SBATCH -t 500:00:00

#SBATCH --nodes=1 --ntasks-per-node=1

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

conda activate /data/putnamlab/conda/hifiasm

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

echo "Starting assembly with hifiasm" $(date)

hifiasm -o apul.hifiasm m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq

echo "Assembly with hifiasm complete!" $(date)

conda deactivate

Submitted batch job 300534

20240213

Even though the reads were not trimmed or corrected with Canu, I am going to run Busco on the output. This will provide information about how well the genome was assembled and its completeness based on evolutionarily informed expectations of gene content from near-universal single-copy orthologs. Danielle and Kevin have both run BUSCO before and used similar scripts but I think I’ll adapt mine a little to fit my needs and personal preferences for code.

From the Busco user manual, the mandatory parameters are -i, which defines the input fasta file and -m, which sets the assessment mode (in our case, genome). Some recommended parameters incude l (specify busco lineage dataset; in our case, metazoans), c (specify number of cores to use), and -o (assigns specific label to output).

In /data/putnamlab/shared/busco/scripts, the script busco_init.sh has information about the modules to load and in what order. Both Danielle and Kevin sourced this file specifically in their code, but I will probably just copy and paste the modules. In the same folder, they also used busco-config.ini as input for the --config flag in busco, which provides a config file as an alternative to command line parameters. I am not going to use this config file (yet), as Danielle and Kevin were assembling transcriptomes and I’m not sure what the specifics of the file are (or what they should be for genomes). In /data/putnamlab/shared/busco/downloads/lineages/metazoa_odb10, there is information about the metazoan database.

In /data/putnamlab/jillashey/Apul_Genome/assembly/scripts, nano busco_canu.sh

#!/bin/bash

#SBATCH -t 500:00:00

#SBATCH --nodes=1 --ntasks-per-node=15

#SBATCH --export=NONE

#SBATCH --mem=250GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load BUSCO/5.2.2-foss-2020b

module load BLAST+/2.11.0-gompi-2020b

module load AUGUSTUS/3.4.0-foss-2020b

module load SEPP/4.4.0-foss-2020b

module load prodigal/2.6.3-GCCcore-10.2.0

module load HMMER/3.3.2-gompi-2020b

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

echo "Begin busco on canu-assembled fasta" $(date)

busco -i apul.contigs.fasta -m genome -l /data/putnamlab/shared/busco/downloads/lineages/metazoa_odb10 -c 15 -o apul.busco.canu

echo "busco complete for canu-assembled fasta" $(date)

Submitted batch job 301588. This failed and gave me some errors. This one seemed to have been the fatal one: Message: BatchFatalError(AttributeError("'NoneType' object has no attribute 'remove_tmp_files'")). Danielle ran into a similar error in her busco code so I am going to try to set the --config file as "$EBROOTBUSCO/config/config.ini".

In the script, nano busco_canu.sh

#!/bin/bash

#SBATCH -t 500:00:00

#SBATCH --nodes=1 --ntasks-per-node=15

#SBATCH --export=NONE

#SBATCH --mem=250GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

#module load BUSCO/5.2.2-foss-2020b

#module load BLAST+/2.11.0-gompi-2020b

#module load AUGUSTUS/3.4.0-foss-2020b

#module load SEPP/4.4.0-foss-2020b

#module load prodigal/2.6.3-GCCcore-10.2.0

#module load HMMER/3.3.2-gompi-2020b

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

echo "Begin busco on canu-assembled fasta" $(date)

source "/data/putnamlab/shared/busco/scripts/busco_init.sh" # sets up the modules required for this in the right order

busco --config "$EBROOTBUSCO/config/config.ini" -f -c 15 --long -i apul.contigs.fasta -m genome -l /data/putnamlab/shared/busco/downloads/lineages/metazoa_odb10 -o apul.busco.canu

echo "busco complete for canu-assembled fasta" $(date)

Submitted batch job 301594. Failed, same error as before. Going to try copying Kevin and Danielle code directly, even though its a little messy and confusing with paths.

In the script, nano busco_canu.sh

#!/bin/bash

#SBATCH -t 500:00:00

#SBATCH --nodes=1 --ntasks-per-node=15

#SBATCH --export=NONE

#SBATCH --mem=250GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

echo "Begin busco on canu-assembled fasta" $(date)

labbase=/data/putnamlab

busco_shared="${labbase}/shared/busco"

[ -z "$query" ] && query="${labbase}/jillashey/Apul_Genome/assembly/data/apul.contigs.fasta" # set this to the query (genome/transcriptome) you are running

[ -z "$db_to_compare" ] && db_to_compare="${busco_shared}/downloads/lineages/metazoa_odb10"

source "${busco_shared}/scripts/busco_init.sh" # sets up the modules required for this in the right order

# This will generate output under your $HOME/busco_output

cd "${labbase}/${Apul_Genome/assembly/data}"

busco --config "$EBROOTBUSCO/config/config.ini" -f -c 20 --long -i "${query}" -l metazoa_odb10 -o apul.busco.canu -m genome

echo "busco complete for canu-assembled fasta" $(date)

Submitted batch job 301599. This appears to have worked! Took about an hour to run. This is the primary result in the out file:

# BUSCO version is: 5.2.2

# The lineage dataset is: metazoa_odb10 (Creation date: 2024-01-08, number of genomes: 65, number of BUSCOs: 954)

# Summarized benchmarking in BUSCO notation for file /data/putnamlab/jillashey/Apul_Genome/assembly/data/apul.contigs.fasta

# BUSCO was run in mode: genome

# Gene predictor used: metaeuk

***** Results: *****

C:94.4%[S:9.4%,D:85.0%],F:2.7%,M:2.9%,n:954

901 Complete BUSCOs (C)

90 Complete and single-copy BUSCOs (S)

811 Complete and duplicated BUSCOs (D)

26 Fragmented BUSCOs (F)

27 Missing BUSCOs (M)

954 Total BUSCO groups searched

We have 94.4% completeness with this assembly but 85% complete and duplicated BUSCOs. The busco manual says this on high levels of duplication: “BUSCO completeness results make sense only in the context of the biology of your organism. You have to understand whether missing or duplicated genes are of biological or technical origin. For instance, a high level of duplication may be explained by a recent whole duplication event (biological) or a chimeric assembly of haplotypes (technical). Transcriptomes and protein sets that are not filtered for isoforms will lead to a high proportion of duplicates. Therefore you should filter them before a BUSCO analysis”.

Danielle also got a high number (78.9%) of duplicated BUSCOs in her de novo transcriptome of Apulchra, but Kevin got much less duplication (6.9%) in his Past transcriptome assembly. I need to ask Danielle if she ended up using her Trinity results (which had a high duplication percentage) for her alignment for Apul. I also need to ask her if she thinks the high duplication percentage is biologically meaningful.

Might be worth running HiFiAdapter Filt

20240215

Last night, the hifiasm job failed after almost 2 days but the email says PREEMPTED, ExitCode0. Two minutes after the job failed, job 300534 started again on the server and it says its a hifiasm job…I did not start this job myself, not sure what happened. Looking on the server now, hifiasm is running but has only been running for about 18 hours (as of 2pm today). It’s the same job number though which is strange.

20240220

Hifiasm job is still running after ~5 days. In the meantime, I’m going to run HiFiAdapterFilt, which is an adapter filtering command for PacBio HiFi data. On the github page, it says that the tool converts .bam to .fastq and removes reads with remnant PacBio adapter sequences. Required dependencies are BamTools and BLAST+; optional dependencies are NCBI FCS Adaptor and pigz. It looks like I’ll need to use the original bam file instead of the converted fastq file.

The github says I should add the script and database to my path using:

export PATH=$PATH:[PATH TO HiFiAdapterFilt]

export PATH=$PATH:[PATH TO HiFiAdapterFilt]/DB

I will do this in the script for the adapter filt code that I write myself. In the scripts folder, make a folder for hifi information

mkdir HiFiAdapterFilt

cd HiFiAdapterFilt

I need to make a script for the hifi adapter code. In the scripts/HiFiAdapterFilt folder, I copy and pasted the linked code into hifiadapterfilt.sh. Make a folder for pacbio databases and copy in the db information from the github.

mkdir DB

cd DB

nano pacbio_vectors_db

>gnl|uv|NGB00972.1:1-45 Pacific Biosciences Blunt Adapter

ATCTCTCTCTTTTCCTCCTCCTCCGTTGTTGTTGTTGAGAGAGAT

>gnl|uv|NGB00973.1:1-35 Pacific Biosciences C2 Primer

AAAAAAAAAAAAAAAAAATTAACGGAGGAGGAGGA

In the scripts/HiFiAdapterFilt folder: nano hifiadapterfilt_JA.sh

#!/bin/bash

#SBATCH -t 500:00:00

#SBATCH --nodes=1 --ntasks-per-node=1

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts/HiFiAdapterFilt

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load GCCcore/11.3.0 # need this to resolve conflicts between GCCcore/8.3.0 and loaded GCCcore/11.3.0

module load BamTools/2.5.1-GCC-8.3.0

module load BLAST+/2.9.0-iimpi-2019b

cd /data/putnamlab/jillashey/Apul_Genome/assembly/scripts/HiFiAdapterFilt

echo "Setting paths" $(date)

export PATH=$PATH:[/data/putnamlab/jillashey/Apul_Genome/assembly/scripts/HiFiAdapterFilt] # path to original script

export PATH=$PATH:[/data/putnamlab/jillashey/Apul_Genome/assembly/scripts/HiFiAdapterFilt]/DB # path to db info

echo "Paths set, starting adapter filtering" $(date)

bash hifiadapterfilt.sh -p /data/putnamlab/KITT/hputnam/20240129_Apulchra_Genome_LongRead/m84100_240128_024355_s2.hifi_reads.bc1029 -l 44 -m 97 -o /data/putnamlab/jillashey/Apul_Genome/assembly/data

echo "Completing adapter filtering" $(date)

The -l and -m refer to the minimum length of adapter match to remove and the minumum percent match of adapter to remove, respectively. I left them as the default settings for now. Submitted batch job 303636. Giving me this error:

hifiadapterfilt.sh: line 63: /data/putnamlab/KITT/hputnam/20240129_Apulchra_Genome_LongRead/m84100_240128_024355_s2.hifi_reads.bc1029.temp_file_list: Permission denied

cat: /data/putnamlab/KITT/hputnam/20240129_Apulchra_Genome_LongRead/m84100_240128_024355_s2.hifi_reads.bc1029.bam.temp_file_list: No such file or directory

cat: /data/putnamlab/KITT/hputnam/20240129_Apulchra_Genome_LongRead/m84100_240128_024355_s2.hifi_reads.bc1029.bam.temp_file_list: No such file or directory

Giving me permission denied to write in the folder? I’ll sym link the bam file to my data folder

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

ln -s /data/putnamlab/KITT/hputnam/20240129_Apulchra_Genome_LongRead/m84100_240128_024355_s2.hifi_reads.bc1029.bam

Editing script so that the prefix is connecting with the sym linked file. Submitted batch job 303637. Still giving me the same error. Hollie may need to give me permission to write and access files in that specific folder.

20240221

Probably need to run haplomerger2, which is installed on the server already.

I’m also looking at the Canu FAQs to see if there is any info about using PacBio HiFi reads. Under the question “What parameters should I use for my reads?”, they have this info:

The defaults for -pacbio-hifi should work on this data. There is still some variation in data quality between samples. If you have poor continuity, it may be because the data is lower quality than expected. Canu will try to auto-adjust the error thresholds for this (which will be included in the report). If that still doesn’t give a good assembly, try running the assembly with -untrimmed. You will likely get a genome size larger than you expect, due to separation of alleles. See My genome size and assembly size are different, help! for details on how to remove this duplication.

When I look at the question “My genome size and assembly size are different, help!”, it says that this difference could be due to a heterozygous genome where the assembly separated some loci or the previous estimate is incorrect. They recommended running BUSCO to check completeness of the assembly (which I already did) and using purge_dups to remove duplication. I will look into this.

Next steps

- Run Canu with

-untrimmedoption - Run purge_dups - targets the removal of duplicated sequences to enhance overall quality of assembly

- Run haplomerger - merges haplotypes to addresws heterozygosity

In the scripts folder: nano canu_untrimmed.sh

#!/bin/bash

#SBATCH -t 500:00:00

#SBATCH --nodes=1 --ntasks-per-node=1

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load canu/2.2-GCCcore-11.2.0

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

#echo "Unzip paco-bio fastq file" $(date)

#gunzip m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq.gz

echo "Starting assembly w/ untrimmed flag" $(date)

canu -p apul.canu.untrimmed -d /data/putnamlab/jillashey/Apul_Genome/assembly/data genomeSize=475m -raw -pacbio-hifi m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq

echo "Canu assembly complete" $(date)

Submitted batch job 303660

On the purge_dups github, they say to install using the following:

git clone https://github.com/dfguan/purge_dups.git

cd purge_dups/src && make

# only needed if running run_purge_dups.py

git clone https://github.com/dfguan/runner.git

cd runner && python3 setup.py install --user

Cloned both into the assembly folder (ie /data/putnamlab/jillashey/Apul_Genome/assembly/).

First, use pd_config.py to generate a configuration file. Here’s possible usage:

usage: pd_config.py [-h] [-s SRF] [-l LOCD] [-n FN] [--version] ref pbfofn

generate a configuration file in json format

positional arguments:

ref reference file in fasta/fasta.gz format

pbfofn list of pacbio file in fastq/fasta/fastq.gz/fasta.gz format (one absolute file path per line)

optional arguments:

-h, --help show this help message and exit

-s SRF, --srfofn SRF list of short reads files in fastq/fastq.gz format (one record per line, the

record is a tab splitted line of abosulte file path

plus trimmed bases, refer to

https://github.com/dfguan/KMC) [NONE]

-l LOCD, --localdir LOCD

local directory to keep the reference and lists of the

pacbio, short reads files [.]

-n FN, --name FN output config file name [config.json]

--version show program's version number and exit

# Example

./scripts/pd_config.py -l iHelSar1.pri -s 10x.fofn -n config.iHelSar1.PB.asm1.json ~/vgp/release/insects/iHelSar1/iHlSar1.PB.asm1/iHelSar1.PB.asm1.fa.gz pb.fofn

I need to make a list of the pacbio files that I’ll use in the script and put it in a file called pb.fofn.

for filename in *.fastq; do echo $PWD/$filename; done > pb.fofn

In my scripts folder: nano pd_config_apul.py

#!/bin/bash

#SBATCH -t 100:00:00

#SBATCH --nodes=1 --ntasks-per-node=1

#SBATCH --export=NONE

#SBATCH --mem=250GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load Python/3.9.6-GCCcore-11.2.0 # do i need python?

echo "Making config file for purge dups scripts" $(date)

/data/putnamlab/jillashey/Apul_Genome/assembly/purge_dups/scripts/pd_config.py -l /data/putnamlab/jillashey/Apul_Genome/assembly/data -n config.apul.canu.json /data/putnamlab/jillashey/Apul_Genome/assembly/data/m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq /data/putnamlab/jillashey/Apul_Genome/assembly/data/pb.fofn

echo "Config file complete" $(date)

Submitted batch job 303661. Ran in 1 second. Got this in the error file:

cp: ‘/data/putnamlab/jillashey/Apul_Genome/assembly/data/m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq’ and ‘/data/putnamlab/jillashey/Apul_Genome/assembly/data/m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq’ are the same file

cp: ‘/data/putnamlab/jillashey/Apul_Genome/assembly/data/pb.fofn’ and ‘/data/putnamlab/jillashey/Apul_Genome/assembly/data/pb.fofn’ are the same file

But it did generate a config file, which looks like this:

{

"cc": {

"fofn": "/data/putnamlab/jillashey/Apul_Genome/assembly/data/pb.fofn",

"isdip": 1,

"core": 12,

"mem": 20000,

"queue": "normal",

"mnmp_opt": "",

"bwa_opt": "",

"ispb": 1,

"skip": 0

},

"sa": {

"core": 12,

"mem": 10000,

"queue": "normal"

},

"busco": {

"core": 12,

"mem": 20000,

"queue": "long",

"skip": 0,

"lineage": "mammalia",

"prefix": "m84100_240128_024355_s2.hifi_reads.bc1029.fastq_purged",

"tmpdir": "busco_tmp"

},

"pd": {

"mem": 20000,

"queue": "normal"

},

"gs": {

"mem": 10000,

"oe": 1

},

"kcp": {

"core": 12,

"mem": 30000,

"fofn": "",

"prefix": "m84100_240128_024355_s2.hifi_reads.bc1029.fastq_purged_kcm",

"tmpdir": "kcp_tmp",

"skip": 1

},

"ref": "/data/putnamlab/jillashey/Apul_Genome/assembly/data/m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq",

"out_dir": "m84100_240128_024355_s2.hifi_reads.bc1029.fastq"

}

My config file looks basically the same as the example one on the github. Manually edited the config file so that the out_dir was /data/putnamlab/jillashey/Apul_Genome/assembly/data/. Now the purging can begin using run_purge_dups.py. Here’s possible usage:

usage: run_purge_dups.py [-h] [-p PLTFM] [-w WAIT] [-r RETRIES] [--version]

config bin_dir spid

purge_dups wrapper

positional arguments:

config configuration file

bin_dir directory of purge_dups executable files

spid species identifier

optional arguments:

-h, --help show this help message and exit

-p PLTFM, --platform PLTFM

workload management platform, input bash if you want to run locally

-w WAIT, --wait WAIT <int> seconds sleep intervals

-r RETRIES, --retries RETRIES

maximum number of retries

--version show program's version number and exit

# Example

python scripts/run_purge_dups.py config.iHelSar1.json src iHelSar1

In my scripts folder: nano run_purge_dups_apul.py

#!/bin/bash

#SBATCH -t 100:00:00

#SBATCH --nodes=1 --ntasks-per-node=1

#SBATCH --export=NONE

#SBATCH --mem=250GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load Python/3.9.6-GCCcore-11.2.0 # do i need python?

echo "Starting to purge duplications" $(date)

/data/putnamlab/jillashey/Apul_Genome/assembly/purge_dups/scripts/run_purge_dups.py /data/putnamlab/jillashey/Apul_Genome/assembly/scripts/config.apul.canu.json /data/putnamlab/jillashey/Apul_Genome/assembly/purge_dups/src apul

echo "Duplication purge complete" $(date)

Submitted batch job 303662. Failed immediately with this error:

Traceback (most recent call last):

File "/data/putnamlab/jillashey/Apul_Genome/assembly/purge_dups/scripts/run_purge_dups.py", line 3, in <module>

from runner.manager import manager

ModuleNotFoundError: No module named 'runner'

I installed runner but the code is not seeing it…where am I supposed to put it? inside of the purge_dups github? Okay going to move runner folder inside of the purge_dups folder.

cd /data/putnamlab/jillashey/Apul_Genome/assembly

mv runner/ purge_dups/scripts/

Submitting job again, Submitted batch job 303663. Got the same error. In the run_purge_dups.py script itself, the first few lines are:

#!/usr/bin/env python3

from runner.manager import manager

from runner.hpc import hpc

from multiprocessing import Process, Pool

import sys, os, json

import argparse

So it isn’t seeing that the runner module is there. This issue and this issue on the github were reported but never really answered in a clear way. Will have to look into this more.

In other news, the canu script finished running but looks like it failed. This is the bottom of the error message:

ERROR:

ERROR: Failed with exit code 139. (rc=35584)

ERROR:

ABORT:

ABORT: canu 2.2

ABORT: Don't panic, but a mostly harmless error occurred and Canu stopped.

ABORT: Try restarting. If that doesn't work, ask for help.

ABORT:

ABORT: failed to configure the overlap store.

ABORT:

ABORT: Disk space available: 8477.134 GB

ABORT:

ABORT: Last 50 lines of the relevant log file (unitigging/apul.canu.untrimmed.ovlStore.config.err):

ABORT:

ABORT:

ABORT: Finding number of overlaps per read and per file.

ABORT:

ABORT: Moverlaps

ABORT: ------------ ----------------------------------------

ABORT:

ABORT: Failed with 'Segmentation fault'; backtrace (libbacktrace):

ABORT:

Unsure what it means…

20240301

BIG NEWS!!!!! This week, a paper came out that assembled and annotated the Orbicella faveolata genome using PacBio HiFi reads (Young et al. 2024)!!!!!!! The github for this paper has a detailed pipeline for how the genome was put together. Since I am also using HiFi reads, I will be following their methodology! I am using this pipeline starting at line 260.

I changed the file from bam to fastq, but now I need to change it to fasta with seqtk. In the scripts folder: nano seqtk.sh

#!/bin/bash

#SBATCH -t 100:00:00

#SBATCH --nodes=1 --ntasks-per-node=10

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load seqtk/1.3-GCC-9.3.0

echo "Convert PacBio fastq file to fasta file" $(date)

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

seqtk seq -a m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq > m84100_240128_024355_s2.hifi_reads.bc1029.fasta

echo "Fastq to fasta complete! Summarize read lengths" $(date)

awk '/^>/{printf("%s\t",substr($0,2));next;} {print length}' m84100_240128_024355_s2.hifi_reads.bc1029.fasta > rr_read_lengths.txt

echo "Read length summary complete" $(date)

Submitted batch job 304257.

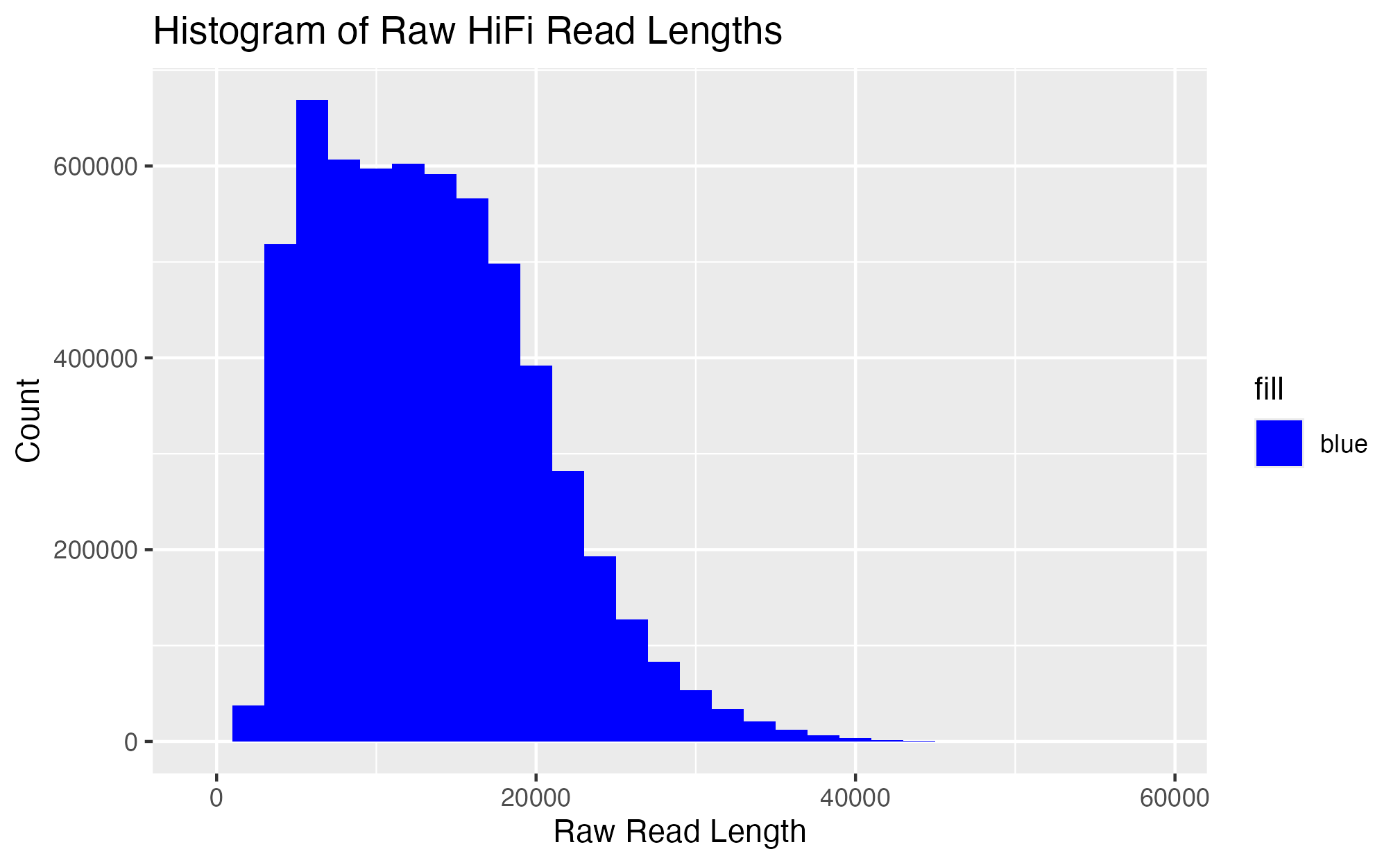

In R, I looked at the data to quantify length for each read. See code here.

```{r, echo=F} read.table(file = “../data/rr_read_lengths.txt”, header = F) %>% dplyr::rename(“hifi_read_name” = 1, “length” = 2) -> hifi_read_length nrow(hifi_read_length) # 5,898,386 total reads mean(hifi_read_length$length) # mean length of reads is 13,424.64 sum(hifi_read_length$length) #length sum 79,183,709,778. Will need this for the NCBI submission

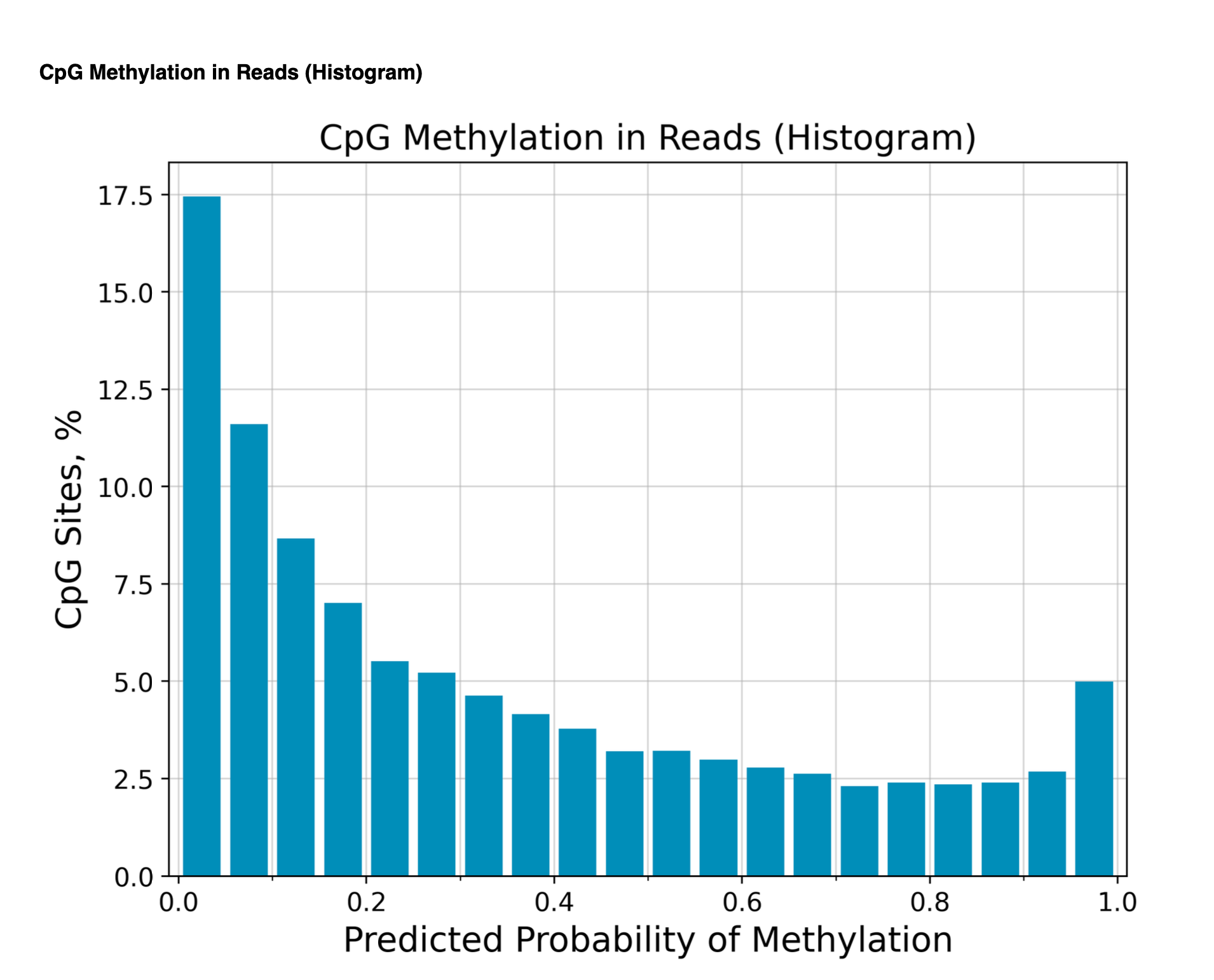

Make histogram for read bins from raw hifi data

```{r, echo = F}

ggplot(data = hifi_read_length,

aes(x = length, fill = "blue")) +

geom_histogram(binwidth = 2000) +

labs(x = "Raw Read Length", y = "Count", title = "Histogram of Raw HiFi Read Lengths") +

scale_fill_manual(values = c("blue")) +

scale_y_continuous(labels = function(x) format(x, scientific = FALSE))

20240303

My next step is to remove any contaminant reads from the raw hifi reads. From Young et al. 2024: “Raw HiFi reads first underwent a contamination screening, following the methodology in [68], using BLASTn [32, 68] against the assembled mitochondrial O. faveolata genome and the following databases: common eukaryote contaminant sequences (ftp.ncbi.nlm.nih. gov/pub/kitts/contam_in_euks.fa.gz), NCBI viral (ref_ viruses_rep_genomes) and prokaryote (ref_prok_rep_ genomes) representative genome sets”.

I tried to run the update_blastdb.pl script (included in the blast program) with the BLAST+/2.13.0-gompi-2022a module but I got this error:

Can't locate Archive/Tar.pm in @INC (@INC contains: /usr/local/lib64/perl5 /usr/local/share/perl5 /usr/lib64/perl5/vendor_perl /usr/share/perl5/vendor_perl /usr/lib64/perl5 /usr/share/perl5 .) at /opt/software/BLAST+/2.13.0-gompi-2022a/bin/update_blastdb.pl line 41.

BEGIN failed--compilation aborted at /opt/software/BLAST+/2.13.0-gompi-2022a/bin/update_blastdb.pl line 41.

Not sure what this means…will email Kevin Bryan to ask about it, as I don’t want to mess with anything on the installed modules. I did download the contam_in_euks.fa.gz db to my computer so I’m going to copy it to Andromeda. This file is considerably smaller than the viral or prok dbs.

Make a database folder in the Apul genome folder.

cd /data/putnamlab/jillashey/Apul_Genome

mkdir dbs

cd dbs

zgrep -c ">" contam_in_euks.fa

3554

Now I ran run a script that blasts the pacbio fasta against these sequences. In /data/putnamlab/jillashey/Apul_Genome/assembly/scripts, nano blast_contam_euk.sh

#!/bin/bash

#SBATCH -t 100:00:00

#SBATCH --nodes=1 --ntasks-per-node=10

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load BLAST+/2.13.0-gompi-2022a

echo "BLASTing hifi fasta against eukaryote contaminant sequences" $(date)

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

blastn -query m84100_240128_024355_s2.hifi_reads.bc1029.fasta -subject /data/putnamlab/jillashey/Apul_Genome/dbs/contam_in_euks.fa -task megablast -outfmt 6 -evalue 4 -perc_identity 90 -num_threads 15 -out contaminant_hits_euks_rr.txt

echo "BLAST complete, remove contaminant seqs from hifi fasta" $(date)

awk '{ if( ($4 >= 50 && $4 <= 99 && $3 >=98 ) ||

($4 >= 100 && $4 <= 199 && $3 >= 94 ) ||

($4 >= 200 && $3 >= 90) ) {print $0}

}' contaminant_hits_euks_rr.txt > contaminants_pass_filter_euks_rr.txt

echo "Contaminant seqs removed from hifi fasta" $(date)

Submitted batch job 304389. Finished in about 2.5 hours. Looked at the output in R (code here).

20240304

Emailed Kevin Bryan this morning and asked if he knew anything about why the update_blastdb.pl wasn’t working. Still waiting to hear back from him.

Kevin Bryan also emailed me this morning about my hifiasm job that has been running for 18 days and said: “This job has been running for 18 days. I just took a look at it and it appears you didn’t specify -t $SLURM_CPUS_ON_NODE (and also #SBATCH --exclusive) to make use of all of the CPU cores on the node. You might want to consider re-submitting this job with those parameters. Because the nodes generally have 36 cores, it should be able to catch up to where it is now in a little over half a day, assuming perfect scaling.”

I need to add those parameters into the hifiasm code, so cancelling the 300534 job. In /data/putnamlab/jillashey/Apul_Genome/assembly/scripts, nano hifiasm.sh:

#!/bin/bash -i

#SBATCH -t 30-00:00:00

#SBATCH --nodes=1 --ntasks-per-node=36

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH --exclusive

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

conda activate /data/putnamlab/conda/hifiasm

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

echo "Starting assembly with hifiasm" $(date)

hifiasm -o apul.hifiasm m84100_240128_024355_s2.hifi_reads.bc1029.fastq.fastq -t 36

echo "Assembly with hifiasm complete!" $(date)

conda deactivate

Submitted batch job 304463

20240304

Response from Kevin Bryan about viral and prok blast databases: “Ok, I downloaded those databases, and actually consolidated the rest of them into /data/shared/ncbi-db/, under which is a directory for today, and then there will be a new one next Sunday and following Sundays. There’s a file /data/shared/ncbi-db/.ncbirc that gets updated to point to the current directory, which the blast* tools will automatically pick up, so you can just do -db ref_prok_rep_ genomes, for example.

For other tools that can read the ncbi databases, you can use blastdb_path -db ref_viruses_rep_genomes to get the path, although for some reason with the nr database you need to specify -dbtype prot, i.e., blastdb_path -db nr -dbtype prot.

The reason for the extra complication is because otherwise a job that runs while the database is being updated may fail or return strange results. The dated directories should resolve this issue. Note that Unity blast-plus modules work in a similar way with a different path, /datasets/bio/ncbi-db.”

Amazing! Now I can move forward with the blasting against viral and prok genomes. In the scripts folder: nano blastn_viral.sh

#!/bin/bash

#SBATCH -t 30-00:00:00

#SBATCH --nodes=1 --ntasks-per-node=36

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH --exclusive

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load BLAST+/2.13.0-gompi-2022a

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

echo "Blasting hifi reads against viral genomes to look for contaminants" $(date)

blastn -query m84100_240128_024355_s2.hifi_reads.bc1029.fasta -db ref_viruses_rep_genomes -outfmt 6 -evalue 1e-4 -perc_identity 90 -out viral_contaminant_hits_rr.txt

echo "Blast complete!" $(date)

Submitted batch job 304500

In the scripts folder: nano blastn_prok.sh

#!/bin/bash

#SBATCH -t 30-00:00:00

#SBATCH --nodes=1 --ntasks-per-node=36

#SBATCH --export=NONE

#SBATCH --mem=500GB

#SBATCH --mail-type=BEGIN,END,FAIL #email you when job starts, stops and/or fails

#SBATCH --mail-user=jillashey@uri.edu #your email to send notifications

#SBATCH --account=putnamlab

#SBATCH --exclusive

#SBATCH -D /data/putnamlab/jillashey/Apul_Genome/assembly/scripts

#SBATCH -o slurm-%j.out

#SBATCH -e slurm-%j.error

module load BLAST+/2.13.0-gompi-2022a

cd /data/putnamlab/jillashey/Apul_Genome/assembly/data

echo "Blasting hifi reads against prokaryote genomes to look for contaminants" $(date)

blastn -query m84100_240128_024355_s2.hifi_reads.bc1029.fasta -db ref_prok_rep_genomes -outfmt 6 -evalue 1e-4 -perc_identity 90 -out prok_contaminant_hits_rr.txt

echo "Blast complete!" $(date)

Submitted batch job 304502

20240306

Making list of all software programs that Young et al. 2024 used and if they are on Andromeda

- blastn

- On Andromeda? YES

- Meryl

- On Andromeda? NO

- Genome-Scope2

- On Andromeda? NO

- Hifiasm

- On Andromeda? NO but I added it to

putnamlabvia conda

- On Andromeda? NO but I added it to

- Quast

- On Andromeda? YES

- Busco

- On Andromeda? YES

- Merqury

- On Andromeda? NO

- RepeatModeler2

- On Andromeda? NO

- Repeat-Masker

- On Andromeda? YES

- TeloScafs

- On Andromeda? NO

- PASA

- On Andromeda? NO

- funnannotate

- On Andromeda? NO

- Augustus

- On Andromeda? YES

- GeneMark-ES/ET

- On Andromeda? YES but only GeneMark-ET

- snap

- On Andromeda? YES

- glimmerhmm

- On Andromeda? NO

- Evidence Modeler

- On Andromeda? NO

- tRNAscan-SE

- On Andromeda? YES

- Trinity

- On Andromeda? Yes

- InterproScan

- On Andromeda? YES

20240311

Hifiasm (with unfiltered reads) finished running over the weekend and the prok blast script preemptively ended and then restarted in the early hours of this morning. I think this might be because I am not making use of all cores on the node (similar to my earlier hifiasm script). I cancelled the prok blast job (304502) and edited the script so that it includes the flag -num_threads 36. Submitted batch job 305351

It created many files:

-rw-r--r--. 1 jillashey 19G Mar 7 23:58 apul.hifiasm.ec.bin

-rw-r--r--. 1 jillashey 47G Mar 8 00:08 apul.hifiasm.ovlp.source.bin

-rw-r--r--. 1 jillashey 17G Mar 8 00:12 apul.hifiasm.ovlp.reverse.bin

-rw-r--r--. 1 jillashey 1.2G Mar 8 01:37 apul.hifiasm.bp.r_utg.gfa

-rw-r--r--. 1 jillashey 21M Mar 8 01:37 apul.hifiasm.bp.r_utg.noseq.gfa

-rw-r--r--. 1 jillashey 8.6M Mar 8 01:41 apul.hifiasm.bp.r_utg.lowQ.bed

-rw-r--r--. 1 jillashey 1.1G Mar 8 01:42 apul.hifiasm.bp.p_utg.gfa

-rw-r--r--. 1 jillashey 21M Mar 8 01:42 apul.hifiasm.bp.p_utg.noseq.gfa

-rw-r--r--. 1 jillashey 8.2M Mar 8 01:46 apul.hifiasm.bp.p_utg.lowQ.bed

-rw-r--r--. 1 jillashey 506M Mar 8 01:47 apul.hifiasm.bp.p_ctg.gfa

-rw-r--r--. 1 jillashey 11M Mar 8 01:47 apul.hifiasm.bp.p_ctg.noseq.gfa

-rw-r--r--. 1 jillashey 2.0M Mar 8 01:49 apul.hifiasm.bp.p_ctg.lowQ.bed

-rw-r--r--. 1 jillashey 469M Mar 8 01:50 apul.hifiasm.bp.hap1.p_ctg.gfa

-rw-r--r--. 1 jillashey 9.9M Mar 8 01:50 apul.hifiasm.bp.hap1.p_ctg.noseq.gfa

-rw-r--r--. 1 jillashey 2.0M Mar 8 01:52 apul.hifiasm.bp.hap1.p_ctg.lowQ.bed

-rw-r--r--. 1 jillashey 468M Mar 8 01:52 apul.hifiasm.bp.hap2.p_ctg.gfa

-rw-r--r--. 1 jillashey 9.9M Mar 8 01:52 apul.hifiasm.bp.hap2.p_ctg.noseq.gfa

-rw-r--r--. 1 jillashey 1.9M Mar 8 01:54 apul.hifiasm.bp.hap2.p_ctg.lowQ.bed

This page gives a brief overview of the hifiasm output files, which is super helpful. It generates the assembly graphs in Graphical Fragment Assembly (GFA) format.

- prefix.r_utg.gfa: haplotype-resolved raw unitig graph. This graph keeps all haplotype information.

- A unitig is a portion of a contig. It is a nondisputed and assembled group of fragments. A contiguous sequence of ordered unitigs is a contig, and a single unitig can be in multiple contigs.

- prefix.p_utg.gfa: haplotype-resolved processed unitig graph without small bubbles. Small bubbles might be caused by somatic mutations or noise in data, which are not the real haplotype information. Hifiasm automatically pops such small bubbles based on coverage. The option –hom-cov affects the result. See homozygous coverage setting for more details. In addition, the option -p forcedly pops bubbles.

- Confused about the bubbles, but it looks like a medium level (what is a “medium” level”?) of heterozygosity will result in bubbles (see image in this post)

- Homozygous coverage refers to coverage threshold for homozygous reads. Hifiasm prints it as:

[M::purge_dups] homozygous read coverage threshold: X. If it is not around homozygous coverage, the final assembly might be either too large or too small.

- prefix.p_ctg.gfa: assembly graph of primary contigs. This graph includes a complete assembly with long stretches of phased blocks.

- From my understanding based on this post discussing concepts in phased assemblies, a phased assembly identifies different alleles

- prefix.a_ctg.gfa: assembly graph of alternate contigs. This graph consists of all contigs that are discarded in primary contig graph.

- There were none of these files in my output. Does this mean all contigs created were used in the final assembly?

- prefix.hap*.p_ctg.gfa: phased contig graph. This graph keeps the phased contigs for haplotype 1 and haplotype 2.

I believe this file (apul.hifiasm.bp.p_ctg.gfa) contains the sequence information for the assembled contigs. zgrep -c "S" apul.hifiasm.bp.p_ctg.gfa showed that there were 188 Segments, or continuous sequences, in this assembly, meaning there are 188 contigs (to my understanding). This file (apul.hifiasm.bp.p_ctg.noseq.gfa) contains information about the reads used to construct the contigs in a plain text format.

head apul.hifiasm.bp.p_ctg.noseq.gfa

S ptg000001l * LN:i:21642937 rd:i:82

A ptg000001l 0 + m84100_240128_024355_s2/165481517/ccs 0 25806 id:i:5084336 HG:A:a

A ptg000001l 6512 + m84100_240128_024355_s2/221910786/ccs 0 21626 id:i:5609370 HG:A:a

A ptg000001l 7287 - m84100_240128_024355_s2/128778986/ccs 0 24026 id:i:2691352 HG:A:a

A ptg000001l 7493 + m84100_240128_024355_s2/262476615/ccs 0 30540 id:i:287120 HG:A:a

A ptg000001l 15042 + m84100_240128_024355_s2/191824085/ccs 0 27619 id:i:2783058 HG:A:a

A ptg000001l 16616 - m84100_240128_024355_s2/29886096/ccs 0 28664 id:i:2336674 HG:A:a

A ptg000001l 17527 - m84100_240128_024355_s2/37553051/ccs 0 31883 id:i:2336554 HG:A:a

A ptg000001l 21104 - m84100_240128_024355_s2/242419829/ccs 0 28551 id:i:5120251 HG:A:a

A ptg000001l 21788 + m84100_240128_024355_s2/266536351/ccs 0 27994 id:i:5577462 HG:A:a

The S line is the Segment and it acts as a header for the the contig. LN:i: is the segment length (in this case, 21,642,937 bp). The rd:i: is the read coverage, calculated by the reads coming from the same contig (in this case, read coverage is 82, which is high). The A lines provide information about the sequences that make up the contig. Here’s what each column means

- Column 1: should always be A

- Column 2: contig name

- Column 3: contig start coordinate of subregion constructed by read

- Column 4: read strand (+ or -)

- Column 5: read name

- Column 6: read start coordinate of subregion which is used to construct contig

- Column 7: read end coordinate of subregion which is used to construct contig

- Column 8: read ID

- Column 9: haplotype status of read.

HG:A:a,HG:A:p, andHG:A:mindicate that the read is non-binnable (ie heterozygous), father/hap1 specific, or mother/hap2 specific.

If I’m interpreting this correctly, it looks like most of the reads in the first contig are heterozygous.

The error file (slurm-304463.error) for this script contains the histogram of the kmers. It has 4 iterations of kmer histograms with some sort of analysis in between the histograms. Here’s what the last histogram looks like:

[M::ha_analyze_count] lowest: count[9] = 2315

[M::ha_analyze_count] highest: count[83] = 417609

[M::ha_hist_line] 1: ****************************************************************************************************> 1732549

[M::ha_hist_line] 2: ************* 53793

[M::ha_hist_line] 3: **** 16969

[M::ha_hist_line] 4: ** 9184

[M::ha_hist_line] 5: * 5507

[M::ha_hist_line] 6: * 4361

[M::ha_hist_line] 7: * 3389

[M::ha_hist_line] 8: * 2959

[M::ha_hist_line] 9: * 2315

[M::ha_hist_line] 10: * 2369

[M::ha_hist_line] 11: 2037

[M::ha_hist_line] 12: 1889

[M::ha_hist_line] 13: 1724

[M::ha_hist_line] 14: 1729

[M::ha_hist_line] 15: 1721

[M::ha_hist_line] 16: 1641

[M::ha_hist_line] 17: 1530

[M::ha_hist_line] 18: 1687

[M::ha_hist_line] 19: 1389

[M::ha_hist_line] 20: 1341

[M::ha_hist_line] 21: 1370

[M::ha_hist_line] 22: 1269

[M::ha_hist_line] 23: 1249

[M::ha_hist_line] 24: 1329

[M::ha_hist_line] 25: 1327

[M::ha_hist_line] 26: 1317

[M::ha_hist_line] 27: 1274

[M::ha_hist_line] 28: 1370

[M::ha_hist_line] 29: 1495

[M::ha_hist_line] 30: 1468

[M::ha_hist_line] 31: 1677

[M::ha_hist_line] 32: 1625

[M::ha_hist_line] 33: 1729

[M::ha_hist_line] 34: 1697

[M::ha_hist_line] 35: 1825

[M::ha_hist_line] 36: 1919

[M::ha_hist_line] 37: 1966

[M::ha_hist_line] 38: 2066

[M::ha_hist_line] 39: * 2101

[M::ha_hist_line] 40: * 2195

[M::ha_hist_line] 41: * 2119

[M::ha_hist_line] 42: * 2100

[M::ha_hist_line] 43: * 2325

[M::ha_hist_line] 44: * 2644

[M::ha_hist_line] 45: * 2807

[M::ha_hist_line] 46: * 3080

[M::ha_hist_line] 47: * 3289

[M::ha_hist_line] 48: * 3661

[M::ha_hist_line] 49: * 3984

[M::ha_hist_line] 50: * 4856

[M::ha_hist_line] 51: * 5391

[M::ha_hist_line] 52: ** 6627

[M::ha_hist_line] 53: ** 7648

[M::ha_hist_line] 54: ** 9319

[M::ha_hist_line] 55: *** 11051

[M::ha_hist_line] 56: *** 13316

[M::ha_hist_line] 57: **** 16452

[M::ha_hist_line] 58: ***** 19650

[M::ha_hist_line] 59: ****** 24229

[M::ha_hist_line] 60: ******* 29998

[M::ha_hist_line] 61: ********* 37438

[M::ha_hist_line] 62: *********** 45813

[M::ha_hist_line] 63: ************* 54367

[M::ha_hist_line] 64: **************** 67165

[M::ha_hist_line] 65: ******************* 79086

[M::ha_hist_line] 66: *********************** 95901

[M::ha_hist_line] 67: *************************** 111990

[M::ha_hist_line] 68: ******************************* 128413

[M::ha_hist_line] 69: *********************************** 147679

[M::ha_hist_line] 70: ***************************************** 171224

[M::ha_hist_line] 71: ********************************************** 193638

[M::ha_hist_line] 72: **************************************************** 217984

[M::ha_hist_line] 73: ********************************************************** 242258

[M::ha_hist_line] 74: **************************************************************** 266643

[M::ha_hist_line] 75: ********************************************************************** 291355

[M::ha_hist_line] 76: **************************************************************************** 317522

[M::ha_hist_line] 77: ********************************************************************************* 337960

[M::ha_hist_line] 78: ************************************************************************************** 358656

[M::ha_hist_line] 79: ******************************************************************************************* 378437

[M::ha_hist_line] 80: ********************************************************************************************** 393447

[M::ha_hist_line] 81: ************************************************************************************************* 405399

[M::ha_hist_line] 82: *************************************************************************************************** 413774

[M::ha_hist_line] 83: **************************************************************************************************** 417609

[M::ha_hist_line] 84: **************************************************************************************************** 416365

[M::ha_hist_line] 85: **************************************************************************************************** 417459

[M::ha_hist_line] 86: *************************************************************************************************** 413637

[M::ha_hist_line] 87: ************************************************************************************************* 404341

[M::ha_hist_line] 88: ********************************************************************************************* 387519

[M::ha_hist_line] 89: ***************************************************************************************** 372331

[M::ha_hist_line] 90: ************************************************************************************ 352702

[M::ha_hist_line] 91: ******************************************************************************** 333308

[M::ha_hist_line] 92: ************************************************************************* 305452

[M::ha_hist_line] 93: ******************************************************************* 279706

[M::ha_hist_line] 94: ************************************************************** 257317

[M::ha_hist_line] 95: ******************************************************* 230115

[M::ha_hist_line] 96: ************************************************* 205580

[M::ha_hist_line] 97: ******************************************* 181564

[M::ha_hist_line] 98: ************************************** 159113

[M::ha_hist_line] 99: ********************************* 139005

[M::ha_hist_line] 100: ***************************** 120518

[M::ha_hist_line] 101: ************************* 102686

[M::ha_hist_line] 102: ********************* 86025

[M::ha_hist_line] 103: ****************** 73567

[M::ha_hist_line] 104: *************** 61207

[M::ha_hist_line] 105: ************ 50380

[M::ha_hist_line] 106: ********** 41491

[M::ha_hist_line] 107: ******** 34384

[M::ha_hist_line] 108: ******* 28223

[M::ha_hist_line] 109: ***** 22483

[M::ha_hist_line] 110: **** 18607

[M::ha_hist_line] 111: **** 14975

[M::ha_hist_line] 112: *** 12513

[M::ha_hist_line] 113: ** 10316

[M::ha_hist_line] 114: ** 8237

[M::ha_hist_line] 115: ** 6969

[M::ha_hist_line] 116: * 6015

[M::ha_hist_line] 117: * 5348

[M::ha_hist_line] 118: * 4850

[M::ha_hist_line] 119: * 4508

[M::ha_hist_line] 120: * 4436

[M::ha_hist_line] 121: * 4296

[M::ha_hist_line] 122: * 4655

[M::ha_hist_line] 123: * 4414

[M::ha_hist_line] 124: * 4850

[M::ha_hist_line] 125: * 5053

[M::ha_hist_line] 126: * 5326

[M::ha_hist_line] 127: * 6256

[M::ha_hist_line] 128: ** 6763

[M::ha_hist_line] 129: ** 7359

[M::ha_hist_line] 130: ** 8371

[M::ha_hist_line] 131: ** 9116

[M::ha_hist_line] 132: ** 10114

[M::ha_hist_line] 133: *** 11557

[M::ha_hist_line] 134: *** 12951

[M::ha_hist_line] 135: *** 14573

[M::ha_hist_line] 136: **** 16195

[M::ha_hist_line] 137: **** 17982

[M::ha_hist_line] 138: ***** 19859

[M::ha_hist_line] 139: ***** 22041

[M::ha_hist_line] 140: ****** 24033

[M::ha_hist_line] 141: ****** 26500

[M::ha_hist_line] 142: ******* 30035

[M::ha_hist_line] 143: ******** 32677

[M::ha_hist_line] 144: ********* 36297

[M::ha_hist_line] 145: ********* 39324

[M::ha_hist_line] 146: ********** 43146

[M::ha_hist_line] 147: *********** 47105

[M::ha_hist_line] 148: ************ 52168

[M::ha_hist_line] 149: ************* 55935

[M::ha_hist_line] 150: *************** 61001

[M::ha_hist_line] 151: **************** 65990

[M::ha_hist_line] 152: ***************** 70743

[M::ha_hist_line] 153: ****************** 74363

[M::ha_hist_line] 154: ******************* 79804

[M::ha_hist_line] 155: ******************** 83768

[M::ha_hist_line] 156: ********************* 88057

[M::ha_hist_line] 157: ********************** 92818

[M::ha_hist_line] 158: *********************** 97623

[M::ha_hist_line] 159: ************************* 103918

[M::ha_hist_line] 160: ************************** 107072

[M::ha_hist_line] 161: ************************** 110105

[M::ha_hist_line] 162: *************************** 113902

[M::ha_hist_line] 163: **************************** 117243

[M::ha_hist_line] 164: **************************** 118933

[M::ha_hist_line] 165: ***************************** 122058

[M::ha_hist_line] 166: ****************************** 123371

[M::ha_hist_line] 167: ****************************** 125091

[M::ha_hist_line] 168: ****************************** 125263

[M::ha_hist_line] 169: ****************************** 125254

[M::ha_hist_line] 170: ****************************** 123856

[M::ha_hist_line] 171: ****************************** 123656

[M::ha_hist_line] 172: ***************************** 121423

[M::ha_hist_line] 173: ***************************** 121400

[M::ha_hist_line] 174: **************************** 117980

[M::ha_hist_line] 175: **************************** 115344

[M::ha_hist_line] 176: *************************** 112655

[M::ha_hist_line] 177: ************************** 109202

[M::ha_hist_line] 178: ************************* 105297

[M::ha_hist_line] 179: ************************ 102172

[M::ha_hist_line] 180: *********************** 97507

[M::ha_hist_line] 181: ********************** 93418

[M::ha_hist_line] 182: ********************* 88400

[M::ha_hist_line] 183: ******************** 83674

[M::ha_hist_line] 184: ******************* 77971

[M::ha_hist_line] 185: ***************** 72480

[M::ha_hist_line] 186: **************** 68366

[M::ha_hist_line] 187: *************** 63165

[M::ha_hist_line] 188: ************** 58702

[M::ha_hist_line] 189: ************* 54012

[M::ha_hist_line] 190: ************ 50360